Pavel Larionov

Composite AI: The Art of AI Collaboration

Introduction

The pace of AI breakthroughs nowadays might seem spontaneous, but it is actually the result of profound work by numerous research groups around the globe developing algorithms and approaches. Every new feature and AI-powered product is a mix of those algorithms, chained together and manipulating large numbers and dimensions to create, say, an animated image of a cute cat for us. Involving AI at every step of those processes is currently expensive and not always efficient. That is why a seamless integration of AI with traditional algorithms is necessary: it balances the versatility of AI models with the robustness of classic computing. This way, the currently unstable nature of generative AI is counterbalanced and double-checked, making the solution truly bulletproof and production-ready. The businesses and creatives who understand which model to use where, and what is a job for AI and what isn’t, will be the ones to survive the age of disruptive AI.

In this exploration, we dive deep into the mechanisms and applications of the so-called Composite AI approach, drawing parallels with established methodologies and examining real-world implementations that showcase this synergistic approach.

OpenAI: Standing on the Shoulders of Giants

OpenAI is still at the forefront of the AI revolution. Its core team consists of highly talented and renowned machine learning specialists. That said, much of its technology relies on a sophisticated integration of foundational frameworks and algorithms created by many companies and researchers over the past decades. Some examples:

- _Recurrent Neural Networks (RNNs), one of the two most prominent families of neural network architectures, were developed in the 1980s by several researchers working independently, perhaps most notably at Caltech.

- _Autoregressive Transformers, the heart of OpenAI’s ChatGPT, predict the most probable next piece of information based on the previous input (which is, in spirit, also how your autocomplete works). The Transformer architecture was introduced by Google in 2017.

- _GANs or generative adversarial networks, used for various generative tasks, were introduced by Ian Goodfellow in 2014 (he was researching it at the University of Montreal).

- _Diffusion models, which learn to restore images from noise and are inspired by principles of thermodynamics, were proposed by a variety of institutions, e.g. Stanford University.

These algorithms, in turn, are based on mathematical principles that were sometimes discovered long ago; latent space conversion, for example, can be traced back to statistical work from the early 20th century.

Without a doubt, since its founding, OpenAI has created numerous algorithms and pipelines of its own:

- _GPT itself, the Generative Pre-trained Transformer series, which revolutionized context-aware text generation.

- _DALL-E – text-to-image neural network.

- _CLIP – Contrastive Language-Image Pre-Training – which bridges the gap between visual and textual data, enabling multimodal models.

- _Codex – the model that originally powered GitHub Copilot, a coding assistant, trained on public code from GitHub.

- _Whisper – speech recognition system.

- _Gym – a toolkit for developing and comparing reinforcement learning algorithms.

So the progress we celebrate by no means came out of nowhere. But it took a company able to put all the pieces of the puzzle in place.

The Most Recent Development: Sora by OpenAI

While the ChatGPT platform showed how powerful these technologies can be when applied correctly, with generative video we are now stepping into the next phase of the AI revolution, as OpenAI has introduced Sora.

On the surface, Sora is yet another video generation platform of the kind we saw throughout 2023, Runway being one of the most prominent examples. Under the hood, however, OpenAI calls Sora “an important step towards AGI (artificial general intelligence)”. Let’s briefly discuss why.

Technologically, Sora is built from the bits and pieces we mentioned earlier:

- _Trained on large amounts of media files of various resolutions, durations and aspect ratios, the model breaks them down into so-called “patches” of visual information. In GPT, tokens play the same role.

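- _Video generation is based on diffusion (from noise to image): a transformer-based diffusion model starts from noisy patches and gradually denoises them, and it can generate an entire video at once rather than strictly one frame at a time.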

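- _Faithfulness to the prompt comes from the re-captioning technique first introduced in DALL-E 3: training videos are paired with highly descriptive captions, and GPT expands short user prompts into detailed ones, which keeps the storyline behind the scenes coherent and consistent.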
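To make the idea of patches more concrete, here is a minimal sketch, assuming a video is already available as a NumPy array of shape (frames, height, width, channels). The function name `to_spacetime_patches` and the patch sizes are our own illustrative choices: OpenAI has not published Sora’s code, and according to its technical report the model first compresses videos into a latent space, a step this sketch skips.

```python
import numpy as np


def to_spacetime_patches(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) array into flattened spacetime patches.

    Returns an array of shape (num_patches, pt * ph * pw * C), i.e. a
    sequence of "tokens" that a transformer could consume.
    """
    t, h, w, c = video.shape
    if t % pt or h % ph or w % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Split every axis into (number of blocks, block size).
    x = video.reshape(t // pt, pt, h // ph, ph, w // pw, pw, c)
    # Move the block indices to the front and the within-block axes to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch: all pixels of a small cube of time x height x width.
    return x.reshape(-1, pt * ph * pw * c)
```

A longer or wider clip simply yields more patches of the same size, which is one way a single model can be trained on videos of different resolutions, durations and aspect ratios.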

The most fascinating thing about Sora is its emergent properties, which only appear when these technologies are applied at an enormous, internet-size scale.

We saw such emergent properties in GPT, when the model was able to derive answers from context and “reason” about topics that never came up in its training data, which makes users (and some researchers and developers) believe that GPT can actually think.

Now we are about to witness a similar kind of excitement with generated videos: to produce plausible videos, the model must be able to reproduce basic sequences of actions and reactions.

Examples of Using Composite AI Approach

Sora + Gaussian Splatting

We started our outlook with Sora, OpenAI’s new video generation model. If you have read our previous article about spatial computing, you probably remember the Gaussian splatting technique. If not, we encourage you to read it; in short, Gaussian splatting is a method that converts a series of images of an object, taken without any special equipment, into a 3D object that can be imported into 3D editors like Blender and further into game engines like Unreal or Unity. In the same article, we also showed how this technique can work with screenshots from virtual worlds (e.g. recreating virtual objects from a video game) or with frames from a video (e.g. from a drone) as input.

You can probably already guess what we are getting to.

In the video above:

- _left footage is the Sora video

- _right footage is a 3D Gaussian Splat generated from the video

Yes, you can combine Sora (itself a combination of different AI techniques) with another machine learning method, Gaussian splatting, to make 3D objects from videos produced by Sora. The result is a full-cycle digital production pipeline that requires no photo or video equipment and no 3D modeling or sketching, and that can take you from your craziest idea to a 3D object for your virtual experience, video game or anything in between.
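When a video serves as the input, a Gaussian splatting pipeline first needs a set of still frames. A simple approach, which is our assumption here rather than a requirement of any particular tool, is to pick frames evenly across the clip. The hypothetical helper below only computes which frame indices to extract:

```python
from __future__ import annotations


def evenly_spaced_frames(total_frames: int, count: int) -> list[int]:
    """Return `count` frame indices spread evenly from the first to the last frame."""
    if total_frames <= 0 or count <= 0:
        return []
    count = min(count, total_frames)  # cannot extract more frames than exist
    if count == 1:
        return [0]
    step = (total_frames - 1) / (count - 1)
    # step >= 1 here, so the rounded indices are strictly increasing.
    return [round(i * step) for i in range(count)]
```

For example, `evenly_spaced_frames(600, 20)` selects 20 frames from a 20-second clip at 30 fps, starting at frame 0 and ending at frame 599. The frames themselves could then be read with OpenCV (`cv2.VideoCapture`, seeking via `cv2.CAP_PROP_POS_FRAMES`) or exported with ffmpeg before being handed to the splatting tool.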

Intelligent Configurator: AI meets E-Commerce

Recently, we created an intelligent customization tool for a company that produces pacifier chains. Let’s take this problem as an example and break it down to understand how we solved it.

_the problem

The configurator features lots of beads to choose from. Buyers don’t want to scroll through all of them, and not everyone knows where to start.

_the idea

We could have programmed some kind of rule-based algorithm with nested “ifs” and “elses” that considers color theory and asks the user multiple questions. But first, nobody likes answering questions in forms, and second, we are an innovation company, so why not use AI?

_the solution

So we decided to let users simply enter a free-form prompt in a text field. Our AI then does the heavy lifting: it comes up with beads, matches colors and tries its best to fit the chain to the described child.

The inputs don’t have to be exact (like specific colors or shapes). Buyers can vaguely describe the topics or interests they would like the chain to feature, and the AI matches them to what’s available in the store.

_composite AI approach

First of all, we processed the shop’s product catalog with AI to bring it into the format we required.

Then we started implementing the front- and backend flow:

- First, the user request is sent to the backend and analyzed by the AI model

- On the backend, we process this request and go back and forth with the model to extract the details and infer the missing information; that’s mostly a prompt engineering task

- The final response from the model is formatted as JSON: essentially a big text file full of parameters that serve as programmatic requests to the traditional part of the algorithm. This structure is not meant for human readers, so it is not what you typically receive from GPT unless you specifically ask for it.

- This JSON is passed to the traditional part of the flow, which picks beads by shape and color and assembles them in a very linear and predictable fashion. This way, we avoid situations where the AI part comes up with a chain we don’t have materials for.

- On the frontend, the user just sees the chain combined in real time.
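The core of this split can be sketched in a few lines. The sketch assumes a hypothetical JSON shape (a list of beads, each with a `shape` and a `color`) and a hypothetical in-memory catalog; the real configurator’s format is not described here. What it illustrates is the principle: the model only proposes, and deterministic code decides what actually goes on the chain.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Bead:
    sku: str  # hypothetical stock-keeping unit
    shape: str
    color: str


def build_chain(model_output: str, catalog: list[Bead]) -> list[str]:
    """Deterministically map the model's JSON suggestion onto beads in stock.

    Anything that cannot be matched to the catalog is dropped, so the
    result is always buildable.
    """
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc

    requested = data.get("beads", []) if isinstance(data, dict) else []
    if not isinstance(requested, list):
        requested = []  # wrong structure: treat as "no suggestions"

    chain: list[str] = []
    for item in requested:
        if not isinstance(item, dict):
            continue  # skip malformed entries instead of failing the whole order
        shape = str(item.get("shape", "")).lower()
        color = str(item.get("color", "")).lower()
        # 1) exact match on shape and color
        exact = [b for b in catalog if b.shape == shape and b.color == color]
        # 2) fall back to the same shape in any available color
        same_shape = [b for b in catalog if b.shape == shape]
        match = (exact or same_shape or [None])[0]
        if match is not None:
            chain.append(match.sku)
    return chain
```

With this design, a suggested purple heart falls back to a heart bead that is in stock, and a bead type the shop doesn’t carry is simply dropped, so the frontend never renders an impossible chain.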

Of course, the buyer can try again or edit certain parts. You can try it yourself here: schnullerkette.de

Conclusion

In conclusion, the composite AI approach has the potential to revolutionize various industries and domains. By combining the strengths of different AI technologies and traditional algorithms, we can create solutions that are more powerful, efficient, and accurate, while still being affordable. However, it is essential to have a good understanding of how AI technologies work, acquire hands-on experience in applying them, and realize their limitations. By leveraging the strengths of AI and compensating for its weaknesses, we can unlock new possibilities and drive innovation across multiple sectors.

At Hyperinteractive, we are lucky to have all the components necessary for successfully implementing highly complex intelligent solutions. Our portfolio includes various such solutions, for example Dot Go, an app for visually impaired people, and our relationship coach Eric AI.

Your business also needs AI? Let’s talk!

related projects

We have been working on intelligent solutions since 2017. We have experience with custom solutions as well as with all kinds of AI tools. These are just three example cases.

want to explore the topic? have your own ideas?

reach out to us

exploring the frontier: ethical innovation and the future of technology

In a recent gathering of minds at the Innovation Hour, technology enthusiasts and experts embarked on a journey through the labyrinth of modern technological advancements and the ethical quandaries they present. The session, titled “Exploring the Frontier: Ethical Innovation and the Future of Technology,” served as a microcosm of the larger dialogue happening at the intersection of technology and society.

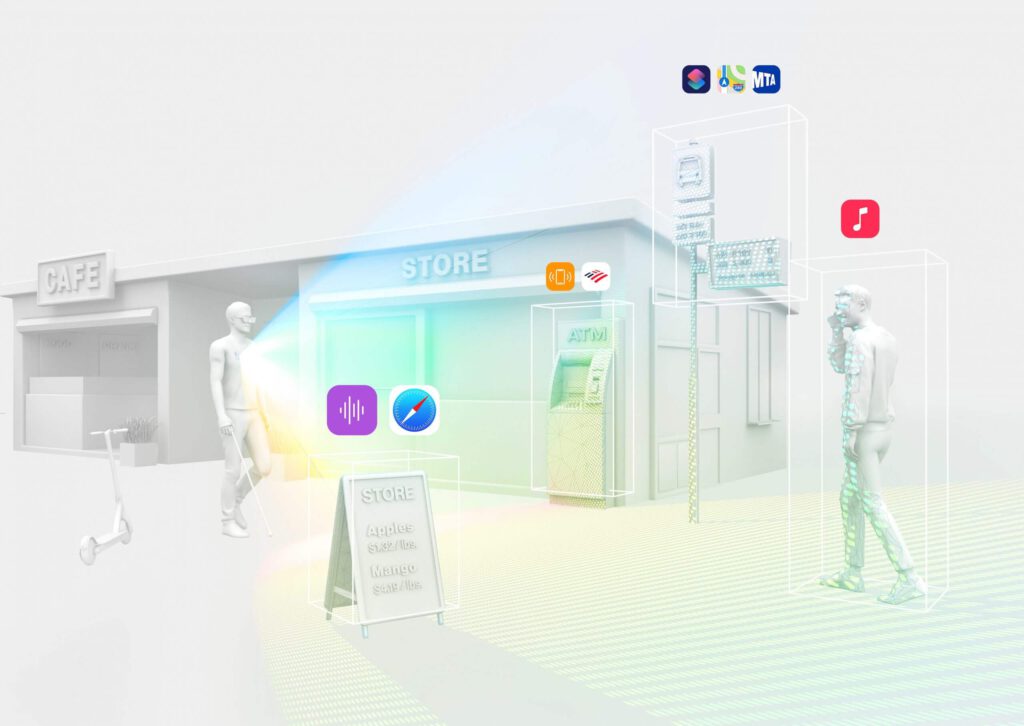

enhanced digital interaction

A significant portion of the discourse centered on the evolution of digital interfaces, particularly advanced browsers. These tools, once mere gateways to the internet, are now becoming sophisticated platforms that aggregate and curate content in real time, tailored to individual needs. This shift towards intuitive, efficient, and personalized digital experiences marks a pivotal change in how we interact with the vast expanse of the internet. It suggests a future where technology anticipates our needs, offering solutions before we even articulate them.

the ethical fabric of AI

As the conversation meandered through the technological landscape, it paused at the ethical implications of artificial intelligence. AI, with its vast potential to revolutionize every aspect of human life, poses profound ethical dilemmas. From the development of Neuralink-like devices to AI’s role in personal and societal decisions, the discussion illuminated the need for a balanced approach. The consensus was clear: innovation should not only push the boundaries of what technology can achieve but also consider the moral, ethical, and societal impacts of these advancements.

transformative entertainment technologies

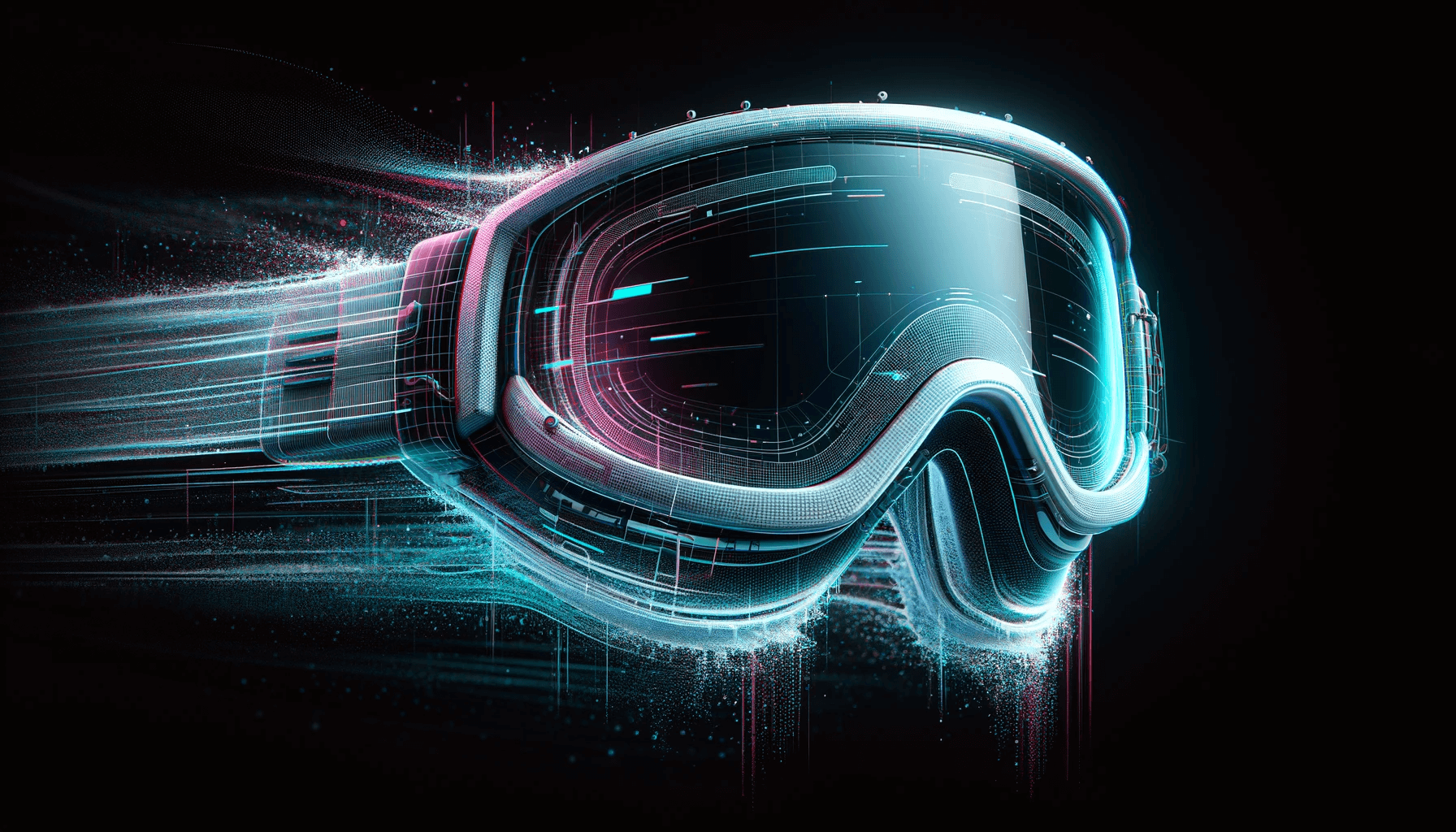

The dialogue ventured into how technology is reshaping entertainment, particularly through VR and AR. The immersive experiences offered by these technologies provide a glimpse into a future where entertainment goes beyond passive consumption, becoming a fully interactive and engaging experience. This transformation has the potential to redefine not only how we consume content but also how we interact with the world around us, blurring the lines between reality and digital creation.

the broader societal impact

Beyond the specifics of browsers, AI, and entertainment technology, the session touched on the broader implications of these innovations. The conversations explored how technology could serve as a force for good, addressing global challenges and improving quality of life. However, it also acknowledged the potential pitfalls, from privacy concerns to the digital divide, underscoring the importance of thoughtful and inclusive technological development.

looking forward

The Innovation Hour proved to be more than just a discussion on technology; it was a reflection on the trajectory of human progress. As we stand on the brink of significant technological shifts, the insights from this session serve as a reminder of the dual responsibility that comes with innovation: to advance and to protect.

In essence, “Navigating the New” encapsulates a moment in time where technology’s potential is limitless, but its direction is guided by thoughtful consideration of its impact. It’s a call to action for innovators, thinkers, and society at large to engage in the dialogue that shapes our future, ensuring that as we move forward, we do so with intention, ethics, and humanity at the forefront.

take a look at our innovation cases

interested in joining the next innovation hour?

reach out to us

Reimagining Offline Experiences with Spatial Computing

Introduction

The anticipation surrounding Apple’s forthcoming spatial computing headset highlights a possibly pivotal moment in digital innovation. Apple itself sees the introduction of the Vision Pro as an “iPhone moment”: its commercial references the very first iPhone commercial, which was about phones, but this time with helmets. Time will tell whether spatial computing is here to stay or will go the way of 3D TV. As digital innovators and tireless explorers, we feel obliged to try this technology hands-on to figure out what is possible. Let’s find out what spatial computing is, which technologies we can use to build experiences for it, and how we envision people perceiving it.

What is Spatial Computing

Spatial computing, which merges the digital and physical realms, is the beginning of the transformation of our perception of reality. It’s a multi-faceted concept, encompassing augmented reality (AR) and virtual reality (VR), but it’s closest to mixed reality (MR). Spatial computing allows for interaction with digital content in three-dimensional space, creating immersive experiences that integrate with our physical environment.

Let’s brush up on the terms and recall the major players in each technological sphere.

Augmented reality

In augmented reality, the real world is dominant; virtual objects serve to highlight real objects or provide contextual information. This lets the user interact with the real environment or the people around them with deeper expertise or in a more entertaining way.

The primary use cases for augmented reality are professional services, with engineering and healthcare probably being the most notable fields. Think of repairing complex machinery like an MRI device, which a single technician usually can’t maintain alone because of the sheer complexity of the device, or of live assistance during surgery that displays tissue temperature or the patient’s pulse, all without having to ask anyone or turn your head.

The main advantage of augmented reality is that the perception of the world is not altered by any additional processing, as AR is typically projected onto a transparent surface, such as glasses. The main disadvantage lies in the challenge of bringing a high-quality image onto a transparent surface.

Which Perceptions of Reality can we Augment

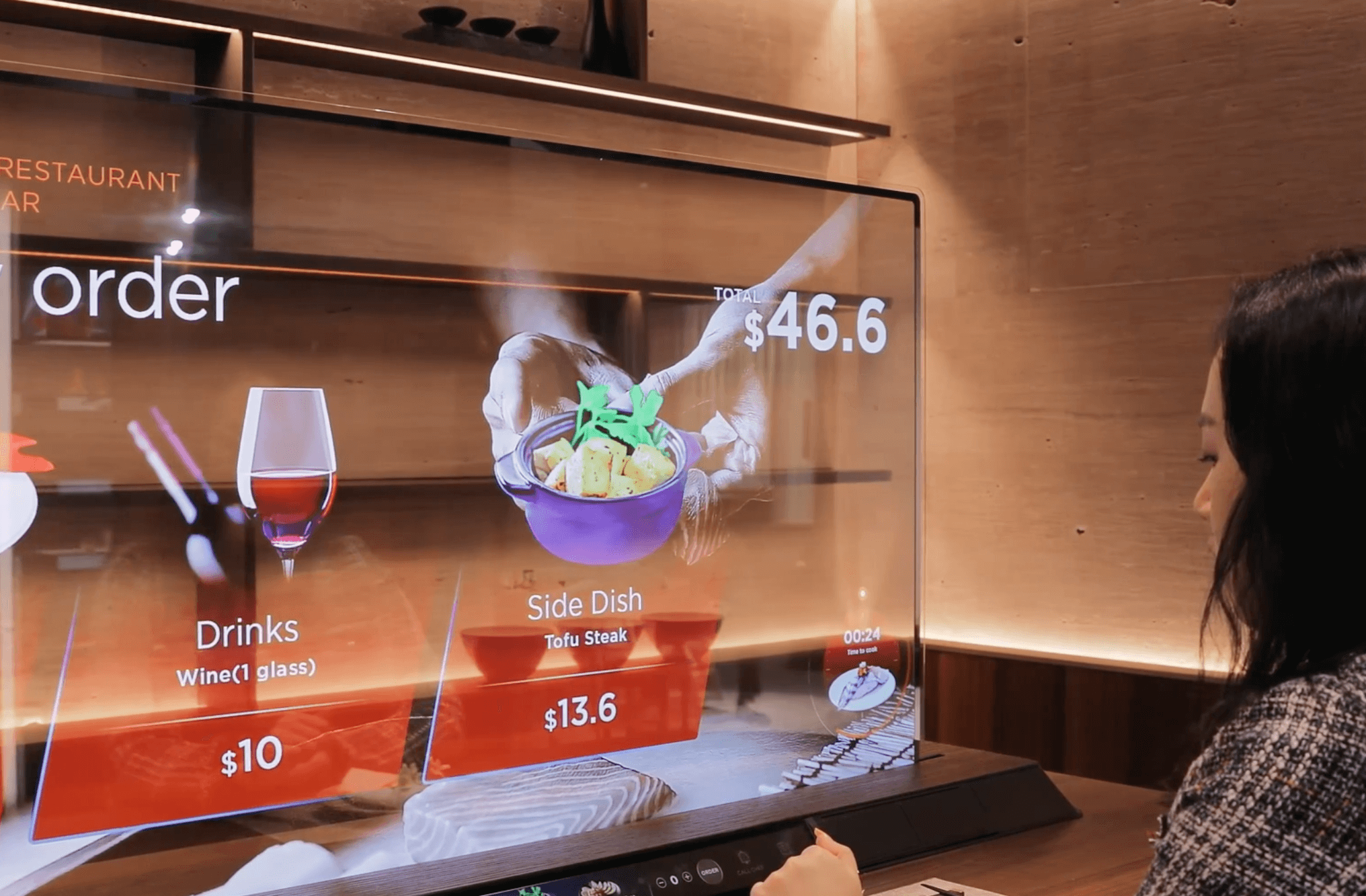

Augmented reality is usually thought of in terms of personal wearable devices, but that is not the only scenario where we can use it. With the latest advancements in transparent screens and projection mapping, we will see stationary devices that blend seamlessly into the environment. You have probably seen the transparent TV that LG announced at CES 2024. Many consumers question the real value of such devices, but they often forget that the main market is not necessarily home TVs but commercial spaces.

Another way to augment reality is through audio. We have been doing this for years when we listen to podcasts on the go or talk on the phone with wireless headphones. Current advancements in AI open up whole new possibilities for weaving audio into our daily lives.

Meta is pioneering this direction with its Ray-Ban Meta smart glasses. These specs can not only take candid pictures and videos of everything you see from your own perspective (which already opens up many creative possibilities), they can also potentially explain what you are looking at or how to interact with certain objects. It’s amazing how much you can do without showing any visuals at all.

Virtual reality

On the opposite side of the spectrum is virtual reality, in which the virtual world is the only reality the user is supposed to experience. The real world fades and disappears, and digital entertainment starts to reign.

Video games are the main driver of the VR industry. You have probably heard of the biggest hits, like Beat Saber or Half-Life: Alyx. Both titles excel in their own way: Beat Saber is highly entertaining and enormously fun, while Half-Life: Alyx is deep and crafted with huge attention to detail. Users get very creative there, too; one math teacher even held a lecture in the game, and I bet the students were amazed.

The technology is not flawless, though: VR headsets are usually rather heavy and uncomfortable for prolonged sessions, many devices struggle to work standalone, and they often cause motion sickness because of a perception mismatch. The latter happens because the brain gets confused: the body reports no motion while the visuals insist otherwise. Since we still see our surroundings in AR, this effect is not present with AR glasses.

People who have never tried VR tend to think that it’s nothing more than a regular screen uncomfortably close to your face, but that is not entirely true. The brain is really eager to be fooled, and as studies show (pain treatment with VR https://www.health.harvard.edu/pain/virtual-reality-for-chronic-pain-relief), even after a short time people tend to forget that the reality they see is virtual.

We highly encourage everyone to try a good VR headset. We once brought the metaverse to the Serviceplan headquarters, and it was so much fun opening up this world to people who had never experienced anything like it.

Why Spatial Computing Claims to Be Different

Spatial computing is more than just the marketing Apple traditionally applies so gracefully. The new Vision Pro might be different for several reasons.

1_High-quality picture

A headset made by Apple is expensive, no doubt, but it will most probably deliver superb picture quality. Apple values good picture and audio quality, and there is no reason for this product to underdeliver.

2_Best of both worlds

Vision Pro can act as AR or as VR or as something in between, based on what we have seen so far.

3_Apple Ecosystem

None of the glasses that currently exist belong to an ecosystem as broad as Apple’s. We can imagine convenience features like simple screen sharing or AirDrop just working, truly merging not only realities but also the digital environments on different devices. In fact, Apple already takes advantage of having devices out there: the iPhone 15 Pro can record spatial video, which can be viewed in 3D only on Vision Pro.

4_Developers

Or, as Steve Ballmer would say: developers, developers, developers, developers, developers, developers. By doing what it has been doing for years, Apple has earned the trust of a whole army of developers who create magnificent apps for iOS and macOS. We can expect at least some of them to start working on spatial computing software, including professional applications.

5_Apple Partners

Apple changed the music industry by gathering the major labels under the iTunes roof. We may see a similar situation unfold, with Apple TV already streaming sports events and with Apple’s acquisition of NextVR, a company that broadcast sports and music events in VR.

So, as you can see, there are several reasons why we are keeping an eye on the launch of Vision Pro and on spatial computing as a whole. I hope that after reading this, you will too.

Recent Advances in Reality Creation Techniques

But enough about Apple. Let’s take a look at some more advanced topics and discuss how new reality-composing tools, such as Gaussian splatting, can benefit the process of creating our new reality.

The most important thing to know about Gaussian splatting is that it is a reality-capture technique that can create a realistic 3D scene from as few as 20 images! Of course, the more images you add, the better the result; with 2,000 images you can create truly breathtaking scenes with just about any camera (or even without one).

3D Gaussian Splatting is a method used to create realistic 3D scenes from a small set of 2D images. It involves estimating a 3D point cloud from these images and then representing each point as a Gaussian, a kind of blurred spot. These Gaussians are characterized by their position, size, color, and transparency and are then layered on top of each other on the screen to build up the scene. The process includes a training phase where the system learns to adjust the Gaussians for the best visual quality.
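The layering step is what turns many blurry spots into a sharp image. The sketch below shows the idea for a single pixel, assuming the Gaussians have already been projected to 2D, each with a screen-space center, a 2x2 covariance, a color, an opacity and a depth. Real implementations do this on the GPU in tiles and learn all of these parameters during training; the `Splat` class is our own simplification, and the 0.99 opacity cap is a common practical safeguard rather than part of the core idea.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Splat:
    mean: tuple[float, float]  # projected center on screen, in pixels
    cov: tuple[float, float, float]  # 2D covariance as (var_x, cov_xy, var_y)
    color: tuple[float, float, float]  # RGB in [0, 1]
    opacity: float  # learned opacity in [0, 1]
    depth: float  # distance from the camera, used for sorting


def falloff(splat: Splat, px: float, py: float) -> float:
    """Gaussian weight of the splat at pixel (px, py); 1.0 at its center."""
    a, b, d = splat.cov
    det = a * d - b * b
    dx, dy = px - splat.mean[0], py - splat.mean[1]
    # Squared Mahalanobis distance using the inverse of [[a, b], [b, d]].
    m = (d * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    return math.exp(-0.5 * m)


def shade_pixel(splats: list[Splat], px: float, py: float) -> tuple[float, float, float]:
    """Front-to-back alpha compositing of depth-sorted splats for one pixel."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light not yet blocked by nearer splats
    for s in sorted(splats, key=lambda s: s.depth):  # nearest first
        alpha = min(0.99, s.opacity * falloff(s, px, py))
        for k in range(3):
            rgb[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return (rgb[0], rgb[1], rgb[2])
```

This is the standard front-to-back blending rule, C = Σ c_i α_i ∏_{j<i} (1 − α_j): each splat contributes its color weighted by its own opacity and by how much light the nearer splats still let through.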

These advancements not only represent technical breakthroughs but also democratize content creation, enabling more creators to build complex, realistic virtual environments.

More examples can be found at poly.cam. If you click on any of the scenes, the scene details show how many frames (images) were used to recreate it.

And one more thing to think about: nothing stops you from taking screenshots in a video game or any other virtual environment and then recreating that scene for your own satisfaction or any other (legal) purpose.

Augmenting Offline Experiences

If we merge both topics we have discussed, playback on the new class of devices and 3D capture techniques, we can change the game for experiences traditionally meant to be visited in person and only in very specific locations, such as museums. By augmenting existing spaces or creating entirely new ones with the tools at our disposal and a creative spark, we can truly play with reality, adding dimensions and introducing perspectives that have never been explored before.

Hyperinteractive: Always Running On the Bleeding Edge

At Hyperinteractive, staying ahead of technology means actively engaging with cutting-edge technologies. Our team tirelessly explores advanced techniques, experiments and builds prototypes, ensuring we use the most sophisticated methods to create deeply immersive and engaging digital experiences.

In fact, we have already created several museum-like experiences for our clients. One of the latest, the iii Museum, an immersive experience for Iranian artists, won several noteworthy international awards (Red Dot, Cresta).

Do you want to unlock additional dimensions for your business or experiences? Let’s talk!


Beyond Centralized Social Media: How Open Protocols Are Reshaping the Internet

Introduction

The last few years have been marked by several changes in the social media landscape: Twitter’s acquisition by Elon Musk and its rebranding to 𝕏, the launch of Threads by Meta, and Jack Dorsey, co-founder of Twitter, creating Bluesky (which is now available to everyone without invite codes). While some major players want to keep control and run their services single-handedly, others are embracing a decentralized approach. The latter has already begun to make waves across the industry, beyond social media.

What does WordPress have to do with decentralized media? What is the Fediverse in the first place? Why do major tech giants like Meta consider adopting a decentralized approach? Let’s find out.

Understanding the Fediverse

Let’s start with the most confusing term, because it’s crucial for understanding what is happening – the Fediverse – which is short for “federated universe.”

The Fediverse is a network of independent servers (also called instances) that store and serve content to users. Users have a choice of which server to join, each with a certain set of rules. So, there is no single organization that decides for everyone; instead, users are able to choose an instance with a suitable set of rules, while still being able to interact with people on different servers.

The servers across the Fediverse offer diverse web services, including social networks and blogs, and communicate through protocols like ActivityPub or the AT Protocol. This unique architecture empowers users with data control while ensuring a cohesive social media experience.
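How does a user on one server find someone on another? On ActivityPub-based servers such as Mastodon, an address like @alice@example.social is typically resolved through WebFinger (RFC 7033), whose response points to the account’s ActivityPub actor document. Here is a minimal sketch that only builds the lookup URL; `example.social` and the helper name are hypothetical, and a real client would then fetch this URL and follow the link whose `rel` is `self`.

```python
from urllib.parse import urlencode


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a Fediverse handle like @user@domain."""
    user, sep, domain = handle.lstrip("@").partition("@")
    if not sep or not user or not domain:
        raise ValueError(f"not a user@domain handle: {handle!r}")
    # The account is identified with the "acct:" URI scheme.
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"
```

The domain part of the handle is what tells you which server to ask, which is exactly how users on different instances can still find and follow each other.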

A popular misconception about decentralized protocols is that they are based on blockchain. While this is technically possible, blockchain plays no role in the Fediverse, as none of its popular protocols are based on it.

Congratulations, you now know more about the Fediverse than the majority of people on Earth.

The Role of ActivityPub

Now that we know what the Fediverse is and isn’t, let’s dig deeper into how it works, using ActivityPub as an example. It will get a little technical, but bear with us: this knowledge will give you a solid understanding and help you make better-informed decisions.

ActivityPub is currently the most popular protocol in the Fediverse. It uses a JSON-based structure for data representation and operates on two levels: Client to Server (C2S) and Server to Server (S2S). As you might have guessed, C2S interaction allows users to engage with their servers, while S2S facilitates inter-server communication, enabling a federated network. ActivityPub categorizes entities as “actors” (like user accounts) and “objects” (like posts), with activities generated based on interactions between these entities.
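For illustration, here is what such an activity can look like when a user publishes a post: a `Note` object wrapped in a `Create` activity, using the ActivityStreams 2.0 vocabulary that ActivityPub builds on. The actor and object URLs, and the `/activity` suffix for the activity ID, are hypothetical; real servers add further fields (timestamps and, in practice, signatures on server-to-server delivery).

```python
import json

PUBLIC = "https://www.w3.org/ns/activitystreams#Public"  # special "everyone" address


def make_create_note(actor: str, note_id: str, content: str) -> dict:
    """Wrap a Note object (the post) in a Create activity (the interaction)."""
    note = {
        "id": note_id,
        "type": "Note",
        "attributedTo": actor,  # the actor who authored the object
        "content": content,
        "to": [PUBLIC],
    }
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "id": note_id + "/activity",  # hypothetical ID scheme
        "type": "Create",
        "actor": actor,
        "object": note,
        "to": [PUBLIC],
    }


if __name__ == "__main__":
    activity = make_create_note(
        "https://example.social/users/alice",
        "https://example.social/notes/1",
        "Hello, Fediverse!",
    )
    print(json.dumps(activity, indent=2))
```

In C2S, a client would POST such a document to the user’s outbox; in S2S, the user’s server delivers it to the inboxes of followers on other servers.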

Bluesky and the AT Protocol

But humanity can’t just have a one-size-fits-all solution, as we have free will, which pushes us to have our own set of priorities – and that’s okay. This is how another decentralized protocol emerged – the AT Protocol, created by Bluesky.

Bluesky, a decentralized social app conceptualized by former Twitter CEO Jack Dorsey, is behind the AT Protocol. The service operated on an invite-code basis until very recently; now registration is open to everyone, and onboarding is very straightforward. The AT Protocol, which powers Bluesky, offers account portability (a feature that ActivityPub supports only in a limited way), which means that users can seamlessly migrate between different providers within the network while keeping their followers, data and identities. Another prominent feature that sets the AT Protocol apart from the competition is the ability to create custom feeds with pre-programmed rules. For example, a user can create a custom feed that shows only posts with images from the people they follow. These feeds can be shared: staying with the same example, anyone who adds this custom feed to their account will see image posts from the people they themselves follow, so the content of a shared feed adapts to each user. This distinguishes custom feeds on the AT Protocol from user-created lists on the 𝕏 platform, which display the same content to everyone who follows the list.
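The key idea, that one shared feed definition produces different content for each viewer, fits in a few lines. This is a simplified sketch with a hypothetical in-memory data model; on Bluesky, a custom feed is actually served by a separate feed generator service that answers `app.bsky.feed.getFeedSkeleton` requests with a list of post references.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    text: str
    has_image: bool


def images_from_my_follows(viewer: str, follows: dict[str, set[str]], posts: list[Post]) -> list[Post]:
    """The feed rule: posts with images, from accounts the viewer follows."""
    followed = follows.get(viewer, set())  # evaluated per viewer, not per feed creator
    return [p for p in posts if p.author in followed and p.has_image]
```

Subscribed to by two different people, the same rule returns two different timelines, whereas a list on 𝕏 returns the same posts to everyone.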

The fact that another protocol was created reflects a growing enthusiasm for decentralized models in social media.

Comparing Decentralized Fediverse Protocols: ActivityPub vs AT Protocol

While ActivityPub has established itself as the standard in the Fediverse, the emergence of the AT Protocol introduces new capabilities, particularly in portability and user autonomy. However, the long-term impact and adoption of the AT Protocol in the broader landscape of decentralized social media are yet to be fully realized.

As we are all about facts and being on-point, here is a high-level comparison of features.

– Main Purpose:

AT Protocol: Customizable, federated protocol.

ActivityPub: Federated social networking.

– Account Portability:

AT Protocol: High emphasis on portability.

ActivityPub: Limited portability.

– Feed Customization:

AT Protocol: Highly customizable feeds, allowing complex, tailored feed structures.

ActivityPub: Standardized feed structures with limited customization options.

– Data Management:

AT Protocol: Implements signed data repositories and Decentralized Identifiers (DIDs) for enhanced security and identity management.

ActivityPub: Standardized approach, less complex.

– Customization:

AT Protocol: Highly flexible for complex requirements.

ActivityPub: Basic, standardized customization.

– Discovery:

AT Protocol: Large-scale search and discovery.

ActivityPub: Hashtag-style discovery.

– Technology Base:

Both are federated, not blockchain-based.

It seems that the AT Protocol is more technologically advanced than ActivityPub, which is to be expected, as the AT Protocol is younger and could learn from its competitors’ mistakes. But time will tell which protocol will attract the most attention, as adoption is what matters most in the end.

Who’s Adopting the Fediverse?

As words do not exist without meanings, technologies can’t thrive without real-life products based on them. So, let’s talk about which services are using these decentralized technologies so that we can recognize a Fediverse when we see one.

Mastodon: Redefining Social Media

Mastodon stands as a prime example of the Fediverse’s principles, offering an alternative to mainstream social media with user-centric control.

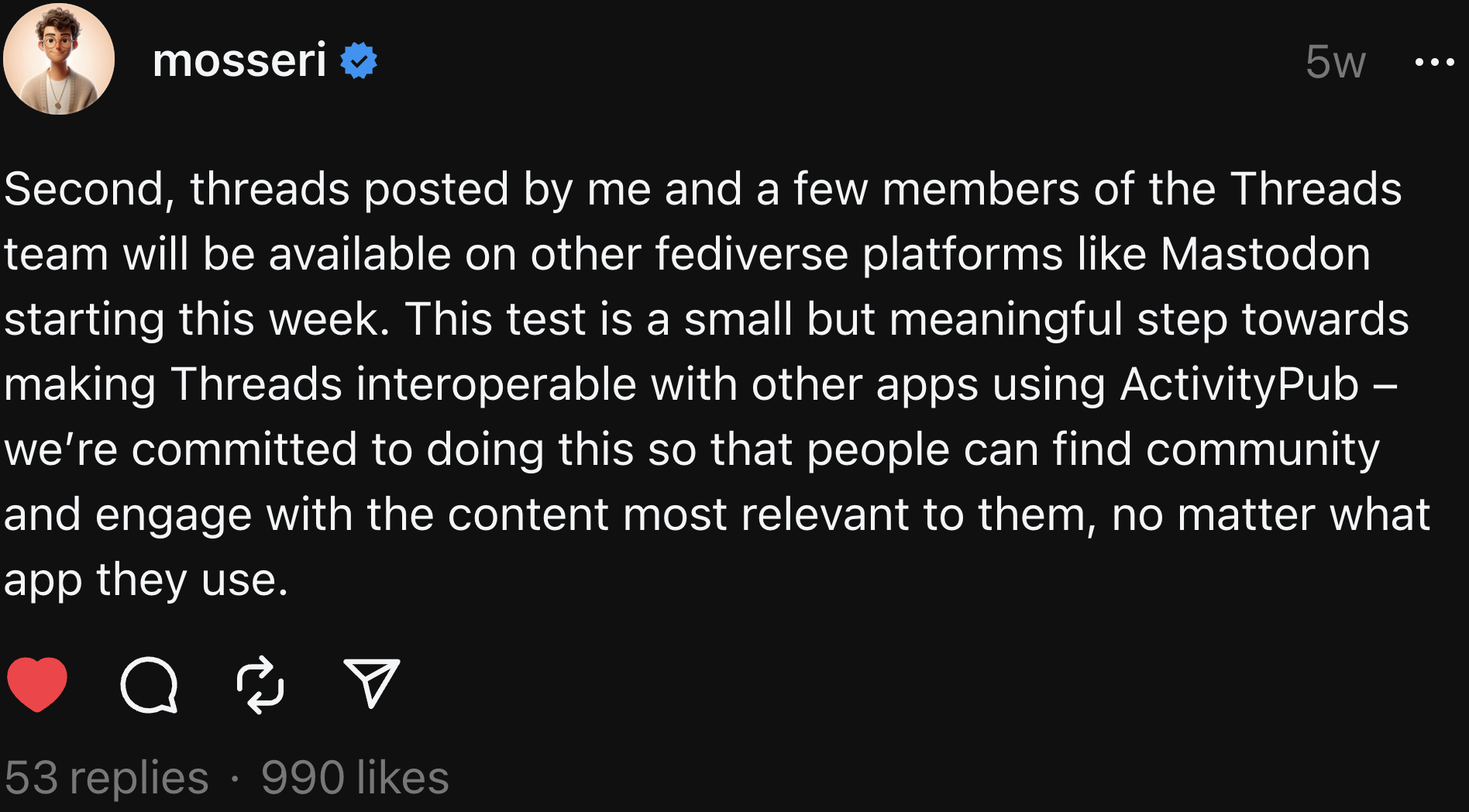

Meta’s Leap into Decentralization

Meta’s announcement that Threads will integrate with the Fediverse, and thereby with Mastodon, reflects a significant industry shift towards decentralized protocols. While this process is not finished yet, it has definitely begun.

Currently, only selected Threads accounts are visible on Mastodon, and they appear with @threads.net as their instance, like the account of Adam Mosseri (head of Instagram at Meta), who posted this:

And here is how the same post looks on Mastodon:

WordPress: Joining the Decentralized Wave

Automattic, the company behind WordPress, also announced their intention to join the Fediverse.

WordPress.com, through its integration with the ActivityPub plugin, has bridged the gap between traditional blogging and the Fediverse. It might seem surprising for a rather conservative CMS provider to make such a bold move, but this enables WordPress users to seamlessly connect their blogs to a wider range of federated platforms, allowing content sharing and interaction across diverse social networks.

In effect, it can make content hosted on millions of WordPress-based websites (the company claims to power 40% of all websites on the internet) far more visible and interactive than ever before. This marks a significant step towards cross-platform engagement in the decentralized web.
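Both the Threads accounts and the WordPress blogs mentioned above become reachable through handles of the form @user@domain. As a minimal sketch, assuming the WebFinger lookup (RFC 7033) that Mastodon uses to resolve such handles, this is how a handle maps to the URL a server would query to find the account. The function names and the handle are hypothetical, and no network request is made.

```python
from urllib.parse import urlencode


def parse_handle(handle: str) -> tuple[str, str]:
    """Split a handle like '@user@domain' (leading '@' optional) into (user, domain)."""
    user, sep, domain = handle.lstrip("@").partition("@")
    # Reject anything that is not exactly one user part and one domain part.
    if not user or not sep or not domain or "@" in domain:
        raise ValueError(f"not a fediverse handle: {handle!r}")
    return user, domain


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a fediverse handle.

    The server at `domain` is asked to describe the resource 'acct:user@domain';
    on Mastodon, the JSON answer typically links to the account's ActivityPub
    actor document. This function only builds the URL; it does not fetch it.
    """
    user, domain = parse_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


# Hypothetical handle, for illustration only.
print(webfinger_url("@alice@example.social"))
```

The domain part of the handle is what tells other servers which instance to ask, which is why Threads accounts show up on Mastodon with @threads.net attached.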

Why Are Tech Giants Interested in Decentralizing Their Services?

WordPress’s integration with ActivityPub and Meta’s exploration of the Fediverse are strategic responses to an evolving digital landscape. But why would they do it? On the surface, these moves aim to increase user engagement and content reach, regardless of which platform users choose. They may also feel pressure to adapt to the trend towards decentralized networks, as more users demand transparency and portability in social media. Finally, corporations face growing demands for data control and privacy from both users and regulators. Opening up could be a preventive step to future-proof their business, as they may worry that regulators will otherwise force them to open their algorithms on less comfortable terms.

The network effect may be another significant reason to join an open protocol. The network effect creates a cycle of growth and added value: each new user increases the platform’s value not just for themselves but for all existing users. The same logic applies one level up, to entire social media services as members of the Fediverse. Every new service that joins makes the Fediverse more attractive for other services, and, consequently, users become more inclined to join this larger community rather than a closed-source walled garden.
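One rough way to see why each newcomer adds value for everyone is to count the possible pairwise connections, the idea behind the Metcalfe’s law heuristic. This is a simplifying assumption, not a measure of real business value: with n participants (users or whole federated services) there are n(n-1)/2 possible links, so the next participant to join adds n new ones. The function names are illustrative.

```python
def possible_links(n: int) -> int:
    """Number of distinct pairs among n participants: n * (n - 1) / 2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (n - 1) // 2


def links_added_by_newcomer(n: int) -> int:
    """Extra possible links created when one participant joins a network of n."""
    return possible_links(n + 1) - possible_links(n)


# Each newcomer can connect to every existing participant, so the gain grows with n.
for n in (10, 100, 1000):
    print(n, possible_links(n), links_added_by_newcomer(n))
```

The same count works whether the nodes are individual users or entire services such as Mastodon instances, Threads, or WordPress blogs.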

In conclusion, by embracing open-source, federated models, tech giants are positioning themselves to leverage future technological developments and diversify social media interactions. This shift indicates a recognition of the value in community-driven innovation and the need to stay relevant in a rapidly changing online world.

Hyperinteractive’s Experience with WordPress

At Hyperinteractive, we have extensive experience with WordPress and have created digital experiences for a diverse range of clients. Take our latest project, an adaptive website for the groundbreaking company voraus robotik, in which we merged a traditional CMS with the most advanced technology of our time: AI.

Our expertise positions us to explore the potential of WordPress in this new decentralized landscape. Want to discuss even more exciting possibilities? Let’s talk!

related projects

We have been working on intelligent solutions since 2017, with experience in custom solutions as well as all the common AI tools. These are just three example cases.

Want to explore the topic? Have your own ideas?

Reach out to us

Apple: Ring-Shaped Devices

Eight months ago, I shared insights into Apple’s initial push into ring-shaped wearable devices. Today, I’m thrilled to report significant advancements in this domain!

just in

Apple’s expanding its wearable technology with a new line of ring-shaped devices – not just for fingers, but for the whole body! Think smart rings, bracelets, and even necklaces.

why it matters

This isn’t just about gadgets. It’s about redefining how we interact with technology – hands-free control, health monitoring, and enhanced security, all in a sleek, wearable form.

my thoughts

I’m excited to see where this goes, especially for screenless and mixed reality applications, where it allows for more accurate and seamless UX. Apple is pushing boundaries again, and it’s exciting to witness!

related projects

We have been working on immersive VR and AR experiences since 2017. These are just three example cases.


Navigating the Future: Top 5 Tech Trends for 2024

Introduction

The digital world is like a river: always moving, always changing. As we ride these currents at Hyperinteractive, it’s crucial to keep an eye on the horizon. What’s coming up in 2024? Well, we’ve scoured predictions from Forrester, G2, and Dynatrace, added our own hands-on experience, and are now ready to bring you the top five trends that we think are not just noteworthy but critical for the journey ahead.

Trend 1: Composite approaches to AI

Remember when AI was just a fancy term for a smart algorithm that could beat you at chess? Those days are long gone. Now, we’re looking at a composite approach to AI. It’s like making a gourmet dish – you (usually) can’t just rely on one ingredient. In 2024, the blend of different AI models with traditional algorithms will be the secret sauce for digital innovation.

For us at Hyperinteractive, this means we’re going to combine different AI flavors to cook up more precise and context-aware solutions for our partners. This composite AI approach is like having a master chef and a sous-chef in the kitchen; one brings creativity while the other adds precision and context.
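To make the chef-and-sous-chef analogy a bit more concrete, here is a minimal sketch of one composite pattern. It assumes a generative model proposes answers, a classic deterministic rule checks them, and a rule-based algorithm takes over if no proposal passes. The task (creating a URL slug for a headline) and all names are hypothetical, and `fake_model` is a stub standing in for a real AI model.

```python
import re
from typing import Callable, Iterable

# Deterministic rule: lowercase letters/digits, words joined by single hyphens.
SLUG_RE = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_slug(slug: str, max_len: int = 60) -> bool:
    """Classic check that double-checks whatever the model proposes."""
    return len(slug) <= max_len and SLUG_RE.fullmatch(slug) is not None


def rule_based_slug(title: str) -> str:
    """Classic fallback: lowercase and collapse non-alphanumeric runs into hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def composite_slug(title: str, model: Callable[[str], Iterable[str]]) -> str:
    """Return the first model proposal that passes validation, else the fallback."""
    for candidate in model(title):
        if is_valid_slug(candidate):
            return candidate
    return rule_based_slug(title)


def fake_model(title: str) -> list[str]:
    """Hypothetical stand-in for a generative model: one bad and one good proposal."""
    return ["Top 5 Tech Trends!", "top-5-tech-trends-2024"]


print(composite_slug("Navigating the Future: Top 5 Tech Trends for 2024", fake_model))
```

The model contributes creativity (a short, readable slug), while the deterministic rule guarantees the result is always well-formed, even when the model gets it wrong.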

Trend 2: AI-generated code & digital immune systems

AI is stepping into coding, and we’re not talking about just fixing typos. AI-generated code is becoming a reality, but there’s a catch – who’s going to understand this code down the line?

2024 might be the year the digital immune system is born. Think of it as the bodyguard of your software, ensuring everything stays resilient and robust. At Hyperinteractive, we use AI coding assistants in a limited way, as we value quality over quantity. With an automated check-up system, we would have a mighty ally on our side, allowing us to use AI coding copilots beyond rapid prototyping.

Trend 3: Chief AI Officer role

AI is getting so big that it now demands its own seat at the executive table. The Chief AI Officer role is set to become common in 2024. It’s like having a diplomat for AI in a company, ensuring that this powerful tool is used wisely and ethically.

This trend underlines our belief that AI isn’t just a tool; it’s a partner in our creative journey, one that needs guidance and watchdogs. At Hyperinteractive, we’re all about using AI responsibly and creatively. So if you don’t have a CAIO role in your company yet, we can help you build a vision and understand what is possible.

Trend 4: Data observability & decision making

Data is the new oil, they say, but it’s also a bit like a wild garden – it needs to be tended and understood. Data observability will become a must-have in 2024, enabling businesses to make smarter decisions, faster.

For us, this means enhancing our ability to understand the intricate tapestry of data that our projects weave. It’s about ensuring that every decision, every strategy is backed by solid, reliable data. This isn’t just number-crunching; it’s about turning data into digital wisdom.

Trend 5: Automation & its expanding role

Automation isn’t new, but it’s getting a major upgrade. Thanks to advances in generative AI, like those triggered by OpenAI’s ChatGPT, automation in 2024 will go from being an expensive sports car to being a Volkswagen Golf: something far more businesses can afford to use.

At Hyperinteractive, this translates to streamlining processes like never before – both internally and for our partners. It means giving our teams more room to focus on creativity and innovation while the mundane tasks are handled by our automated buddies. We’re not replacing human ingenuity; we’re supercharging it.

Conclusion

So there you have it – a sneak peek into 2024 through the lens of Hyperinteractive. These trends aren’t just blips on our radar; they’re the beacons guiding us forward. As we navigate the exciting waters of digital innovation, we’re not just following the current – we’re setting the course. Join us on this journey, and let’s see what amazing destinations we can reach together.
